These are uncertain times for many in the language services and localization industry. There was a palpable air of concern and angst in Montreal. This is to be expected given all the changes that we face from so many directions:

- · Disruption of established government and trade policies

- · AI hype in general is threatening many white-collar jobs

- · Unrealizable expectations about the potential capabilities of AI technology from C-suite leaders that cannot be delivered

- · An emerging global economic slowdown after an already tough business year

- · High levels of economic and business uncertainty

The day after the

conference, I saw the following in my inbox from CSA Research:

I also saw an announcement for an upcoming webinar from

Women In Localization with the theme: Maintaining motivation during disruption,

which added the byline, "with

constant change, staying motivated can be hard." There is concern in the industry far beyond

the community present at GALA.

However, the keynote presentation by Daniel Lamarre, CEO of the Cirque du Soleil Entertainment Group, provided a memorable, uplifting, and inspiring message to the attendees. I rate it as one of the best, if not THE best, keynotes in all the years I have been attending localization conferences. His message was relevant, authentic, and realistically optimistic while speaking to the heart.

He is uniquely qualified to speak to a doomy, gloomy audience, as he also faces challenges and has risen from what seemed insurmountable odds. In response to pandemic shutdowns in March 2020, Cirque du Soleil suspended all 44 active shows worldwide and temporarily laid off 4,679 employees, 95% of its workforce. Annualized revenue dropped from over $1 billion to zero almost overnight. And today, Cirque has to work to remain relevant to digitally obsessed world where many youth have never experienced a circus.

He engineered a recovery, and by early 2023/2024, revenue had climbed back to the pre-pandemic level of approximately $1 billion, though growth is expected to moderate around this level for the next couple of years. Leadership stated the recovery exceeded expectations according to financial market observers.For someone whose primary focus is to find outstanding artists from around the world, provide them with a regular living, and

curate entertainment that leaves the audience enthralled and inspired, he had a

clear understanding of the challenges that business translation professionals

might have in this age of AI madness. Somewhat similar to what his organization faced during the pandemic, when the possibility of large

audiences congregating to watch a magical musical circus-like performance in

45 cities across the world was an impossibility.

The heart of his message was about building the right mindset

as we face challenges, to break through, which he said begins with continual

investment in research and development and a strong focus on creativity. This

is very much the ethos of Cirque and pervades their overall approach and

culture. A summarized highlight of his message follows:

- · Creativity is foundational since it leads to innovation which in turn often results in market leadership.

- · Ongoing and regular reflection is essential to building creativity.

- · Deep curiosity and the questions that it generates are a building block to discovering successful outcomes.

- · While it is important to focus on the problem to get a clear definition of the challenge, it is even more important to focus creatively on possible solutions.

- · Investment in research and development (e.g., AI impact on translation-related processes) and organizational creativity is essential to finding your value-add in challenging times.

- · Debate is a foundation of evolutionary creativity, and a culture that encourages debate is most likely to find the best outcomes and the best ideas that are not possible with hierarchical mandates.

- · Finding a purpose is more likely to create successful outcomes than goals and objectives.

- · The best ideas will come from a clear mandate, debate, and a defined sandbox rather than through unstructured, frequent meetings with rambling, unfocused discussions.

- · He encouraged the audience to focus much more on communicating the value-add of the business.

I’m not sure I have captured the essence effectively, and as

they say, “you had to be there,” as he was successful in lifting everyone’s

spirits. His ability to build connections and empathy was indeed unique. He

closed by encouraging the GALA community to make more concerted and active efforts

to raise their profile and communicate LSP/localization value creation

characteristics in the marketplace aggressively, given the unrelenting AI hype.

Raising the LSP Industry Profile

This keynote triggered a recurring theme that attendees

raised across several sessions about finding a better way to describe the value

of service/product offerings to the marketplace. There were different opinions

and views on whether translation, localization, langops, or something else would

be the most effective professional self-descriptor to build a value-oriented communication

message.

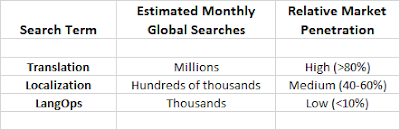

I decided to do some basic research on this subject, via LLM,

and I have mixed feelings about the utility of the output, as it lacks insight

and understanding. I summarize the unedited responses from 3 different LLM

models (which all had very similar results) below:

GPT 4.1

Summary

Normalized Google Trends Frequency (2022-2025)

|

|

Avg Absolute Monthly Search Volume |

Normalized Monthly Searches 0-100 Scale 3-Year Mean |

|

Translation |

1,000,000 |

79.9 |

|

Localization |

200,000 |

40.1 |

|

LangOps |

2,000 |

5.2 |

Which term provides the greatest reach?

- Translation is by far the most recognized and searched term

globally. It is used by major platforms like Google, DeepL, and Microsoft

for their consumer-facing services, which reinforces its dominance and

public familiarity.

- Localization is important for industry professionals and

clients needing cultural adaptation and more sophisticated services, but it has a narrower audience.

- LangOps is emerging in industry circles as a concept for

scalable, AI-driven language operations, but its search volume and public

awareness remain very low.

Key Points:

- If your goal is maximum visibility and broad customer acquisition,

“translation” is the most effective term. It captures the widest audience, aligns with consumer

expectations, and is the default for everyday users seeking language

services.

- “Localization” is valuable for targeting clients who

require cultural adaptation and market-specific solutions, but it should

be used as a supporting term rather than the primary one.

- “LangOps” is best reserved for thought leadership,

technical blogs, or when targeting enterprise clients already familiar

with advanced localization operations.

“Translation” is the term

with the greatest and widest reach for LSPs seeking to increase visibility and

attract a broad customer base. It is the industry

standard, the most searched, and the most recognized by both consumers and

businesses. Using “translation” as your primary keyword will maximize your

discoverability and support value-driven messaging for the widest possible audience.

Claude Sonnet 3.7 Summary

Search

Term Frequency Analysis

The Google Trends data shows significant differences in

search interest across the three terms. "Translation consistently maintains the highest search interest, with values ranging from approximately 80-85 on the relative search interest scale (0-100). "Localization shows moderate interest, with values between 30 and 35, while LangOps demonstrates minimal interest, with values hovering around 2-3.

Translation

"Translation" consistently dominates the

search landscape with approximately 2.5 times more search interest than

"localization" and about 40 times more interest than

"LangOps." This term has maintained its strong position throughout

the three years with a slight upward trend.

Localization

"Localization" maintains a consistent

moderate interest level, showing some fluctuation but generally staying within

the 30-35 range on Google's scale. While significantly lower than

"translation," it still demonstrates substantial search volume that

has remained stable over the three years.

LangOps

"LangOps" shows minimal search interest, consistently

remaining below 5 on Google's scale. Despite being positioned as "the

future of scalable, efficient, AI-powered localization" and "the next

evolution of localization," it has not gained significant traction in

search behavior.

Building Value-Oriented Messaging

To build

strong value-oriented messaging:

1.

Lead with "translation" in

primary marketing materials, website headers, and SEO strategy to maximize

visibility and reach.

2.

Differentiate through "localization" by

explaining how your services go beyond basic translation to include cultural

adaptation, which adds significant value.

3.

Introduce "LangOps" as an advanced concept for

enterprise clients, positioning it as the strategic integration of language

services into business operations.

4.

Bridge the terminology gap by explaining how

translation evolves into localization and ultimately into LangOps as part of a

maturity model for global businesses.

Perplexity Pro Summary

This output is essentially the same as the data already

provided above, as it probably draws its answers from these models. However,

this summary seems to be more accommodating of the potential of LangOps, quite

possibly because of the extensive use of the term AI in its descriptive

materials. They also analyzed each term and its implications in more detail.

Strategic

Term Selection for LSPs

Which

Term Provides the Greatest Reach?

For an LSP seeking to increase visibility and reach a

broader customer base, the choice of terminology is strategically important:

"Translation" would provide the widest

potential reach due to its universal recognition. It's the term used by major

platforms like Google, DeepL, and Microsoft[query], indicating its mainstream

appeal.

Advantages:

- Highest search volume and recognition

- Understood by both technical and non-technical audiences

- Already associated with established tools and services

Disadvantages:

- Highly competitive space

- May position services as commoditized

- Doesn't communicate advanced capabilities beyond basic translation

"Localization" represents a middle ground,

with growing recognition and the ability to communicate more sophisticated

services.

Advantages:

- Communicates a more comprehensive approach than

basic translation

- Established industry term with growing recognition

- o Aligns with current industry trends for 2025

- Suggests cultural adaptation and market-specific

expertise

Disadvantages:

- Lower search volume than "translation"

- May not capture the attention of clients seeking basic translation

services

"LangOps" offers cutting-edge positioning but

with significantly limited reach.

Advantages:

- Positions the company at the forefront of industry evolution

- Communicates AI integration and advanced capabilities

- Potential to attract forward-thinking enterprise clients

- Lower competition for the term itself

Disadvantages:

- Very limited search volume and recognition

- Requires explanation to most potential clients

- May alienate clients seeking traditional service

Perplexity Conclusion

For LSPs seeking to maximize market reach and build

value-oriented messaging, "translation" remains the most effective

foundational term due to its universal recognition and substantial search

volume. However, a strategic approach that leverages all three terms at

appropriate stages of the customer journey will likely yield the best results.

As the industry continues to evolve, LSPs should

monitor the relative popularity of these terms and adjust their terminology

strategies accordingly. The rapid growth of "LangOps," despite its

currently smaller search volume, suggests that forward-thinking providers may

benefit from early adoption of this terminology when targeting enterprise

clients seeking comprehensive language solutions.

Ultimately, the most successful terminology strategy

will align with both current search behaviors and the evolving direction of the

industry, positioning LSPs at the intersection of what clients are looking for

today and what they'll need tomorrow.

AI in Enterprise Localization Panel

I was also involved in an interactive panel organized by Johan Sporre with Britta Aagaard, Gaëtan Chrétiennot, Georg Kirchner, and Konstantin

Savenkov, who auto-summarized the session with GPT shown

here. We discussed misconceptions, opportunities,

and the changing role of humans.

Here is the auto-summary:

🔹 AI is not just a better

translation tool. It’s a set of technologies that require the right setup,

people, and processes to work.

🔹

Many AI deployments in the enterprise are not delivering ROI. Localization is

one of the few areas where AI shows clear value—but only when applied with

care.

🔹

Clients now care about language in a new way. That opens the door to

conversations we couldn’t have before—across IT, marketing, and other teams.

🔹

The real work is not about chasing new buzzwords. It’s about understanding

complexity and helping others navigate it.

🔹

Our role is changing—from translation providers to solution architects, guiding

AI through data, process, and purpose.

Also, a shoutout to Marina

Pantcheva, who gave an instructive and entertaining presentation, which somehow managed to make Cleaning Dirty TM sound fun.

Congratulations to Allison Ferch and the GALA team for holding a

successful and substantial conference in such difficult and tumultuous times.