During the pandemic, many experts have pointed out that enterprises with a broad and comprehensive digital presence are more likely to survive and thrive.

The pandemic has showcased the value of digital operating models and is likely to force many companies to speed up their digital innovation and transformation initiatives. The digital transformation challenge for a global enterprise is even greater, as the need to share content and expand the enterprise's digital presence is massively multilingual, thus putting it beyond the reach of most localization departments, which have a much narrower and more limited focus.

Thus, truly global enterprises and agencies have a growing need to make large volumes of flowing content multilingual, so that communication, problem resolution, collaboration, and knowledge sharing are possible both inside and outside the organization. Most often this needs to happen as close to real time as possible. The greater the enterprise commitment to digital transformation, the greater the need and the urgency. Sophisticated, state-of-the-art machine translation (MT) enables multilingual communication and content sharing at scale across many languages in real time, and is thus becoming an increasingly important core component of enterprise information technology infrastructure. Enterprise-tailored MT is now increasingly a must-have for the digitally agile global enterprise.

However, MT is extremely complex and is best handled by focused, well-funded, and committed experts who build unique competence over many years of experience. Many in the business translation world dabble with open-source tools and build mostly sub-optimal systems that do not reach the capabilities of generic public systems, which creates friction and resistance from translators who are well aware of this shortcoming. MT system development remains a challenge for even the biggest and brightest, and thus, in my opinion, is best left to committed experts.

Given the confidential, privileged and mission-critical nature of the content that is increasingly passing through MT systems today, the issue of data security and privacy is becoming a major factor in the selection of MT systems by enterprises concerned with being digitally agile, but who also wish to ensure that their confidential data is not used by MT technology providers to refine, train, and further improve their MT technology.

While some believe that the only way to accomplish true security is by building your own on-premise MT systems, this task, as I have often said, is best left to large companies with well-funded and long-term committed experts. Do-it-yourself (DIY) technology with open-source options makes little sense if you don't really know, understand, and follow what you are doing with technology this complex.

It is my feeling that MT is a technology that truly belongs in the cloud for enterprise use, and that usually makes more sense on mobile devices for consumer use. In some rare cases, on-premise MT systems do make sense for truly massive-scale users like national security government agencies (CIA, NSA) who can appropriate the resources to do it competently. For most commercial enterprises, however, MT provides the greatest ROI when it is delivered and implemented in the cloud by an expert and focused team who do not have to re-invent the wheel. Customization on a robust and reliable expert MT foundation appears to be the optimal approach. MT is also constantly evolving as new tools, algorithms, data, and processes come to light and enable ongoing incremental improvements, and this too suggests that MT is better suited to cloud deployment. Neural MT requires relatively large computing resources, deep expertise, and significant data resources and management capabilities to be viable. All these factors point to the cloud as the best deployment model for MT, as it essentially remains a work in progress. I am aware that many still disagree on this, and that the cloud versus on-premise issue is one where it is best to agree to disagree.

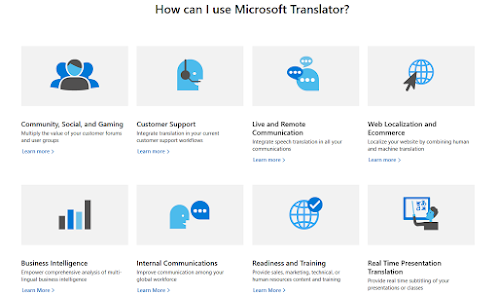

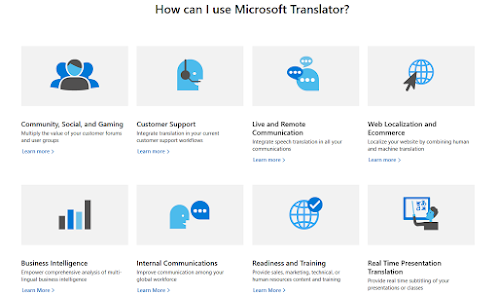

I recently sat down with Chris Wendt, Group Program Manager, and others in his team responsible for Microsoft Translator services, including Bing Translator and Skype Translator. They also connect Microsoft’s research activities with their practical use in services and applications. My intent in our conversation was to specifically investigate, better understand, and clarify the MT data security issues and the many adaptation capabilities that they offer to enterprise customers, as I am aware that the actual facts are often misrepresented, misunderstood, or unclear to many potential users and customers.

Microsoft is a pioneer in the use of MT to serve the technical support information needs of a global customer base, and was the first to make massive support knowledge bases available in machine-translated local languages for their largest international markets. They were also very early users of Statistical MT (SMT) at scale (tens of millions of words translated for millions of users) and were building actively used systems around the same time that Language Weaver was commercializing SMT. Many of us are aware that the Microsoft Translator services are used both by large enterprises and by many LSP agencies in the language services industry because of the relative ease of use, straightforward adaptation capabilities, and relatively low cost. Among the public MT portals, Microsoft is second only to Google in terms of MT traffic, and their consumer solutions on web and mobile platforms are probably used by millions of users across the world every day.

It is important to differentiate between Microsoft’s consumer products and their commercial products when considering the data security policies that are in place when using their machine translation capabilities, as they are quite different.

Consumer Products:

The consumer products are Bing, the Edge browser, and the Microsoft Translator app for the phone. These products run under the consumer terms of use, which make it possible for Microsoft to use the processed data for quality improvement purposes. Microsoft keeps a very small portion of the data, as non-consecutive sentences and without any information about the customer who submitted the translation. There is really nothing to learn from performing a translation. The value only comes when the data is annotated and then used as test or training data. The annotation is expensive, so only a few thousand sentences are used per language every year, at most.

Some people read the consumer terms of use and assume the same applies to commercial enterprise products. That is not the case.

Enterprise Products:

The Translator API is provided via an Azure subscription, which runs under the Azure terms of use.

The Azure terms of use do not allow Microsoft to see any of the data being processed. Azure services generally run as a GDPR processor, and Translator ensures compliance by never writing translated content to persistent storage.

The typical process flow for a submitted translation is as follows:

Decrypt > translate > encrypt > send back > forget.

The Translator API only allows encrypted access, to ensure data is safe in transit. When using the global endpoint, the request will be processed in the nearest available data center. The customer can also control the specific processing location by choosing a geography-specific endpoint from ten locations which are described here.
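To make the endpoint choice concrete, here is a minimal sketch of how a client might build a Translator request that is pinned to a geography. The hostnames, header names, and the `api-version=3.0` parameter reflect my reading of the public Translator v3 documentation and are not taken from the conversation above, so treat them as assumptions to verify. The table covers only a few geographies rather than all ten locations, and the `build_translate_request` helper is hypothetical.

```python
import json
import urllib.parse
import urllib.request

# Assumed hostnames (from public Translator v3 docs; verify before relying on them).
ENDPOINTS = {
    "global": "https://api.cognitive.microsofttranslator.com",
    "europe": "https://api-eur.cognitive.microsofttranslator.com",
    "americas": "https://api-nam.cognitive.microsofttranslator.com",
    "asia_pacific": "https://api-apc.cognitive.microsofttranslator.com",
}


def build_translate_request(text, to_lang, key, region, geography="global"):
    """Build (but do not send) an HTTPS POST request for one translation.

    Hypothetical helper: `key` and `region` stand in for the Azure
    subscription key and resource region of your own Translator resource.
    """
    base = ENDPOINTS[geography]
    # The service only accepts encrypted access, so refuse anything but HTTPS.
    if not base.startswith("https://"):
        raise ValueError("Translator endpoints must use HTTPS")

    query = urllib.parse.urlencode({"api-version": "3.0", "to": to_lang})
    body = json.dumps([{"Text": text}]).encode("utf-8")
    headers = {
        "Ocp-Apim-Subscription-Key": key,
        "Ocp-Apim-Subscription-Region": region,
        "Content-Type": "application/json; charset=UTF-8",
    }
    return urllib.request.Request(
        f"{base}/translate?{query}", data=body, headers=headers, method="POST"
    )


# Example: keep processing inside the European geography.
request = build_translate_request(
    "Hello", "de", key="YOUR_KEY", region="westeurope", geography="europe"
)
# Sending is left to the caller, e.g. urllib.request.urlopen(request).
```

Choosing the geography in one place like this keeps the data-residency decision explicit and easy to audit, which is the point of the geography-specific endpoints.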

Microsoft Translator is certified for compliance with the GDPR processor and confidentiality rules. It is also compliant with all of the following:

CSA STAR: The Cloud Security Alliance (CSA) defines best practices to help ensure a more secure cloud computing environment, and to help potential cloud customers make informed decisions when transitioning their IT operations to the cloud. The CSA published a suite of tools to assess cloud IT operations: the CSA Governance, Risk Management, and Compliance (GRC) Stack. It was designed to help cloud customers assess how cloud service providers follow industry best practices and standards and comply with regulations. Translator has received CSA STAR Attestation.

FedRAMP: The US Federal Risk and Authorization Management Program (FedRAMP) attests that Microsoft Translator adheres to the security requirements needed for use by US government agencies. The US Office of Management and Budget requires all executive federal agencies to use FedRAMP to validate the security of cloud services. Translator is rated as FedRAMP High in both the Azure public cloud and the dedicated Azure Government cloud.

GDPR: The General Data Protection Regulation (GDPR) is a European Union regulation regarding data protection and privacy for individuals within the European Union and the European Economic Area. Translator is GDPR compliant as a data processor.

HIPAA: The Translator service complies with the US Health Insurance Portability and Accountability Act (HIPAA) and the Health Information Technology for Economic and Clinical Health (HITECH) Act, which govern how cloud services can handle personal health information. This ensures that health services can provide translations to clients knowing that personal data is kept private. Translator is included in Microsoft’s HIPAA Business Associate Agreement (BAA). Health care organizations can enter into the BAA with Microsoft to detail each party’s role in regard to security and privacy provisions under HIPAA and HITECH.

HITRUST: The Health Information Trust Alliance (HITRUST) created and maintains the Common Security Framework (CSF), a certifiable framework to help healthcare organizations and their providers demonstrate their security and compliance in a consistent and streamlined manner. Translator is HITRUST CSF certified.

PCI: The Payment Card Industry (PCI) Data Security Standard (DSS) is the global certification standard for organizations that store, process, or transmit credit card data. Translator is certified as compliant under PCI DSS version 3.2 at Service Provider Level 1.

SOC: The American Institute of Certified Public Accountants (AICPA) developed the Service Organization Controls (SOC) framework, a standard for controls that safeguard the confidentiality and privacy of information stored and processed in the cloud, primarily in regard to financial statements. Translator is SOC type 1, 2, and 3 compliant.

US Department of Defense (DoD) Provisional Authorization: US DoD Provisional Authorization enables US federal government customers to deploy highly sensitive data on in-scope Microsoft government cloud services. Translator is rated at Impact Level 4 (IL4) in the government cloud. Impact Level 4 covers Controlled Unclassified Information and other mission-critical data. It may include data designated as For Official Use Only, Law Enforcement Sensitive, or Sensitive Security Information.

ISO: Translator is ISO certified with five certifications applicable to the service. The International Organization for Standardization (ISO) is an independent nongovernmental organization and the world’s largest developer of voluntary international standards. Translator’s ISO certifications demonstrate its commitment to providing a consistent and secure service. Translator’s ISO certifications are:

- ISO 27001: Information Security Management Standards
- ISO 9001:2015: Quality Management Systems Standards
- ISO 27018:2014: Code of Practice for Protecting Personal Data in the Cloud
- ISO 20000-1:2011: Information Technology Service Management
- ISO 27017:2015: Code of Practice for Information Security Controls

The Translator service is subject to annual audits on all of its certifications to ensure the service continues to be compliant.

These standards force Microsoft to have two employees review every change to the live site, to enforce minimal access to the runtime environment, and to have processes in place to protect against external attacks on the data center hardware and software. The standards that Microsoft Translator is certified for, or compliant with, include specific ones for the financial industry and health care providers.

Unlike the content submitted for translation, the documents the customer uses to train a custom system are stored on a Microsoft server. Microsoft doesn’t see the data and can’t use it for any purpose other than building the custom system. The customer can delete the custom system as well as the training data at any time, and there won’t be any residue of the training data on any Microsoft system after deletion, or after account expiration.

Translation in Microsoft’s other commercial products like Office, Dynamics, Teams, Yammer, SharePoint, and others follows the same data security rules described above.

Chris also mentioned that "German customers have been very hesitant to recognize that trustworthy translation in the cloud is possible, for most of the time I have been working on Translator, and I am glad to see now that even the Germans are now warming up to the concept of using translation in the cloud." He pointed me to a VW case study where I found the following quote, as well as the rationale for the benefits of a cloud-centric translation service to a global enterprise that seeks to enable and enhance multilingual communication, collaboration, and knowledge sharing. A deciding factor for the team responsible at VW [in selecting Microsoft] was that none of the data – translation memories, documents to be translated, and trained models – was to leave the European Union (EU) for data protection reasons.

“Ultimately, we expect the Azure environment to provide the same data security as our internal translation portal has offered thus far,”

Tibor Farkas, Head of IT Cloud at Volkswagen

Chris closed with a compelling statement, pointing to the biggest data security problem that exists in business translation: incompetent implementation. Cloud services properly implemented can be as secure as any connected on-premise solution, and in my opinion the greatest risk is often introduced by untrustworthy or careless translators who interact with MT systems, or by incompetent IT staff who maintain an MT portal, as the Translate.com fiasco showed.

"Your readers may want to consider whether their own computing facilities are equally well secured against data grabbing and whether their language service provider is equally well audited and secured. It matters which cloud service you are using, and how the cloud service protects your data."

While I have not focused much on the speech-to-text issue in this post, we should understand that Microsoft also offers SOTA (state-of-the-art) speech-to-text capabilities and that the Skype and Phone app experience also gives them a leg up on speech-related applications that go across languages.

I also gathered some interesting information on the Microsoft Translator customization and adaptation capabilities and experience. I will write a separate post on that subject once I gather a little more information on the matter.