This is a guest post by Mats Linder on the data privacy and security issues around the use of public MT services in professional translator use scenarios.

As I put this post together, I can hear Mark Zuckerberg giving his testimony on Capitol Hill to shockingly ignorant questions from legislators who don't really have a clue. This is not so different from the naive and somewhat ignorant comments I also see in blogs in the translation industry, on the data privacy issue with MT. The looming deadlines of the GDPR legislation have raised the volume of discussion on the privacy issue, but unfortunately not the clarity. GDPR will now result in some companies being fined, and since it is possible to calculate what it costs not to do it right, many companies are being much more careful, at least in Europe. But as the Guardian said: "If it’s rigorously enforced (which could be a big “if” unless data protection authorities are properly resourced) it could blow a massive hole in the covert ad-tracking racket – and oblige us to find less abusive and dysfunctional business models to support our online addiction."

As the Guardian wrote recently:

"This is what security guru Bruce Schneier meant when he observed that “surveillance is the business model of the internet”. The fundamental truth highlighted by Schneier’s aphorism is that the vast majority of internet users have entered into a Faustian bargain in which they exchange control of their personal data in return for “free” services (such as social networking, [MT], and search) and/or easy access to the websites of online publications, YouTube and the like.

Big though Facebook is, however, it’s only the tip of the web iceberg. And it’s there that change will have to come if the data vampires are to be vanquished."

In our current online world, only the paranoid thrive.

Richard Stallman, President of the Free Software Foundation, had this to say:

"To restore privacy, we must stop surveillance before it even asks for consent.

Finally, don’t forget the software on your own computer. If it is the non-free software of Apple, Google or Microsoft, it spies on you regularly. That’s because it is controlled by a company that won’t hesitate to spy on you. Companies tend to lose their scruples when that is profitable. By contrast, free (libre) software is controlled by its users. That user community keeps the software honest."

Apparently, there is a special term for this kind of data acquisition and monitoring effort. Shoshana Zuboff calls this surveillance capitalism.

Also here is Valeria Maltoni on this issue:

"Breaches expose information the other way. They shine a light on the depth and breadth of data gathering practices — and on the business models that rely on them. Awareness changes the perception of knowledge and its use. Anyone not living under a rock now is aware that we likely don't know all the technical implications, but we know enough to start making different decisions on how we browse and communicate online.

Business models are the most problematic, because they create dependency on data and an incentive to collect as much as possible. Beyond advertising, lack of transparency on third party sharing and usage merit further scrutiny. Perhaps the time has come to evolve business practices — how platforms and people interact — and standards — based on laws and regulations"

So when I read that Google says, in a FAQ no less, that they really, with all their little heart, promise not to use your data, or when Microsoft tells me they have a "No-trace Policy" about your MT data, I am more than a little skeptical, especially when, just last week, I got an email from Microsoft about an update to the Terms of the Service Agreement which contains some big updates in clause 2 related to what they can do with "Your Content".

While some may feel that it is possible to trust these companies I remain unconvinced and suggest that you consider the following:

- What does the Terms of Service agreement governing your use of the MT service actually say (not the FAQ or some random policy page)? The only legally enforceable contract an MT user has is what is stated in the TOS, and I would not be surprised if there are several loopholes in there as well.

Once a large web services company sets a data harvesting ad-supported infrastructure in motion, it is not easily turned off, and while it is possible there may be more privacy in the EU, I have already seen that Google has made it very clear that they are using my data every time I use the Google Translate service. So my advice to you is Caveat Emptor if it really matters that your data privacy is intact. But if you send your translation content back and forth via email it does not make any difference anyway. Does it?

Mats has made a valiant attempt to wade through the vague and ambiguous legalese that surrounds the use of these mostly ad-supported MT services in his post below.

=======

How (un)safe is machine translation?

Some time ago there were a couple of posts on this site discussing data security risks with machine translation (MT), notably by Kirti Vashee and by Christine Bruckner. Since they covered a lot of ground and might have created some confusion as to what security options are offered, I believe it may be useful to take a closer look with a narrower perspective, mainly from the professional translator’s point of view. And although the starting point is the plugin applications for SDL Trados Studio, I know that most of these plugins are also available for other CAT tools.

About half a year ago, there was an uproar about Statoil’s discovery that some confidential material had become publicly available due to the fact that it had been translated with the help of a site called translate.com (not to be confused with translated.net, the site of the popular MT provider MyMemory). The story was reported in several places; this report gives good coverage.

Does this mean that all, or at least some, machine translation runs the risk of compromising the material being translated? Not necessarily – what happened to Statoil was the result of trying to get something for nothing; i.e. a free translation. The same thing happens when you use the free services of Google Translate and Microsoft’s Bing. Frequently quoted terms of use for those services state, for instance, that “you give Google a worldwide license to use, host, store, reproduce - - - such content”, and (for Bing): “When you share Your Content with other people, you understand that they may be able to, on a worldwide basis, use, save, record, reproduce - - - Your Content without compensating you”. This should indeed be off-putting to professional translators but should not be cited to scare them from using services for which those terms are not applicable.

The principle is this: If you use a free service, you can be almost certain that your text will be used to “improve the translation services provided”; i.e. parts of it may be shown to other users of the same service if they happen to feed the service with similar source segments. However, the terms of use of Google’s and Microsoft’s paid services – Google Cloud Translate API and Microsoft Translator Text API – are totally different from those of the free services. Not only can you choose not to send back your finalized translations (i.e. update the provider’s data with your own translations); it is in fact not possible – at least not if you use Trados Studio – to do so.

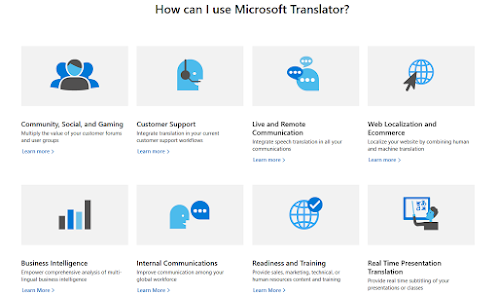

Google and Microsoft are the big providers of MT services, but there are a number of others as well (MyMemory, DeepL, Lilt, Kantan, Systran, SDL Language Cloud…). In essence, the same principle applies to most of them. So let us have a closer look at how the paid services differ from the free.

Google’s and Microsoft’s paid services

Google states, in reply to the question “Will Google share the text I translate with others?”: “We will not make the content of the text that you translate available to the public, or share it with anyone else, except as necessary to provide the Translation API service. For example, sometimes we may need to use a third-party vendor to help us provide some aspect of our services, such as storage or transmission of data. We won’t share the text that you translate with any other parties, or make it public, for any other purpose.”

And here is the reply to the question after that, “Will the text I send for translation, the translation itself, or other information about translation requests be stored on Google servers? If so, how long and where is the information kept?”: “When you send Google text for translation, we must store that text for a short period of time in order to perform the translation and return the results to you. The stored text is typically deleted in a few hours, although occasionally we will retain it for longer while we perform debugging and other testing. Google also temporarily logs some metadata about translation requests (such as the time the request was received and the size of the request) to improve our service and combat abuse. For security and reliability, we distribute data storage across many machines in different locations.”

For the Microsoft Translator Text API the information is more straightforward. On their “API and Hub: Confidentiality” page: “Microsoft does not share the data you submit for translation with anybody.” And on the "No-Trace" page: “Customer data submitted for translation through the Microsoft Translator Text API and the text translation features in Microsoft Office products are not written to persistent storage. There will be no record of the submitted text, or portion thereof, in any Microsoft data center. The text will not be used for training purposes either. –

Note: Known previously as the “no trace option”, all traffic using the Microsoft Translator Text API (free or paid tiers) through any Azure subscription is now “no trace” by design. The previous requirement to have a minimum of 250 million characters per month to enable No-Trace is no longer applicable. In addition, the ability for Microsoft technical support to investigate any Translator Text API issues under your subscription is eliminated.”

Other major players

As for DeepL, there is the same difference between free and paid services. For the former, it is stated – on their "Privacy Policy DeepL" page, under Texts and translations – DeepL Translator (free) – that “If you use our translation service, you transfer all texts you would like to transfer to our servers. This is required for us to perform the translation and to provide you with our service. We store your texts and the translation for a limited period of time in order to train and improve our translation algorithm. If you make corrections to our suggested translations, these corrections will also be transferred to our server in order to check the correction for accuracy and, if necessary, to update the translated text in accordance with your changes. We also store your corrections for a limited period of time in order to train and improve our translation algorithm.”

To the paid service, the following applies (stated on the same page but under Texts and translations – DeepL Pro): “When using DeepL Pro, the texts you submit and their translations are never stored, and are used only insofar as it is necessary to create the translation. When using DeepL Pro, we don't use your texts to improve the quality of our services.” And interestingly enough, DeepL seems to consider their services to fulfill the requirements stipulated – currently as well as in the coming legislation – by the EU Commission (see below).

Lilt is a bit different in that it is free of charge, yet applies strict Data Security principles: “Your work is under your control. Translation suggestions are generated by Lilt using a combination of our parallel text and your personal translation resources. When you upload a translation memory or translate a document, those translations are only associated with your account. Translation memories can be shared across your projects, but they are not shared with other users or third parties.”

MyMemory – a very popular service which in fact is also free of charge, even though they use the paid services of Google, Microsoft, and DeepL (but you cannot select the order in which those are used, nor can you opt out of using them) – also uses its own translation archives and offers the use of the translator’s private TMs. Your own TM material cannot be accessed by any other user, and as for MyMemory’s own archive, this is what they say, under Service Terms and Conditions of Use: “We will not share, sell or transfer ’Personal Data’ to third parties without users' express consent. We will not use ’Private Contributions’ to provide translation memory matches to other MyMemory's users and we will not publish these contributions on MyMemory’s public archives. The contributions to the archive, whether they are ’Public Data’ or ’Private Data’, are collected, processed and used by Translated to create statistics, set up new services and improve existing ones.” One question here is of course what is implied by “improve” existing services. But MyMemory tells me that it means training their machine translation models, and that source segments are never used for this.

And this is what the SDL Language Cloud privacy policy says: “SDL will take reasonable efforts to safeguard your information from unauthorized access. – Source material will not be disclosed to third parties. Your term dictionaries are for your personal use only and are not shared with other users using SDL Language Cloud. – SDL may provide access to your information if SDL plc believes in good faith that disclosure is reasonably necessary to (1) comply with any applicable law, regulation or legal process, (2) detect or prevent fraud, and (3) address security or technical issues.”

Is this the whole truth?

Most of these terms of service are unambiguous, even Microsoft’s. But Google’s leaves room for interpretation – sometimes they “may need to use a third-party vendor to help us provide some aspect of [their] services”, and occasionally they “will retain [the text] for longer while [they] perform debugging and other testing”. The statement from MyMemory about improving existing services also raises questions, but I am told that this means training their machine translation models, and that source segments are never used for this. However, since MyMemory also utilizes Google Cloud Translate API (and you don’t know when), you need to take the same care with both MyMemory and Google.

There is also the problem with companies such as Google and Microsoft that you cannot get them to reply to questions if you want clarifications. And it is very difficult to verify the security provided, so that the “trust but verify” principle is all but impossible to implement (and not only with Google and Microsoft).

Note, however, that there are plugins for at least the major CAT tools that offer possibilities to anonymize (mask) data in the source text that you send to the Google and Microsoft paid services, which provides further security. This is also to some extent built into the MyMemory service.
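To make the idea of masking concrete, here is a minimal Python sketch of what such anonymization could look like. It is not taken from any actual plugin: the patterns, the placeholder format (`[EMAIL_0]`, `[NUM_1]`) and the function names `mask` and `unmask` are all assumptions made for illustration, and a real tool would cover many more kinds of sensitive data (names, addresses, product codes). It also assumes that the MT engine passes the placeholders through unchanged, which is not guaranteed.

```python
import re

# Hypothetical patterns for illustration only: e-mail addresses and numbers.
# One combined regex with named groups, so the text is scanned in a single pass
# and placeholders are never re-matched by a later pattern.
SENSITIVE = re.compile(
    r"(?P<EMAIL>[\w.+-]+@[\w-]+(?:\.[\w-]+)+)"
    r"|(?P<NUM>\d+(?:[.,]\d+)*)"
)


def mask(segment):
    """Replace sensitive spans with placeholders before sending text to MT.

    Returns the masked segment and a mapping from placeholder to original text.
    """
    mapping = {}

    def replace(match):
        # match.lastgroup is the name of the group that matched (EMAIL or NUM).
        token = f"[{match.lastgroup}_{len(mapping)}]"
        mapping[token] = match.group(0)
        return token

    return SENSITIVE.sub(replace, segment), mapping


def unmask(translated, mapping):
    """Put the original values back into the text returned by the MT service."""
    for token, original in mapping.items():
        translated = translated.replace(token, original)
    return translated


source = "Contact anna.berg@example.com about invoice 4471 for 12,500.50 SEK."
masked, mapping = mask(source)
print(masked)

# Stand-in for the MT call: only the masked text would leave your machine.
fake_translation = "Kontakta [EMAIL_0] om faktura [NUM_1] på [NUM_2] SEK."
print(unmask(fake_translation, mapping))
```

The point of the design is that the mapping between placeholders and real values never leaves your own computer; only the masked segment is sent to the provider.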

But even if you never send back your translated target segments, what about the source data that you feed into the paid services? Are they deleted, or are they stored so that another user might hit upon them even if they are not connected to translated (target) text?

Yes and no. They are generally stored, but – also generally – in server logs, inaccessible to users and only kept for analysis purposes, mainly statistical. Cf. the statement from MyMemory.

My conclusion, therefore, is that as long as you do not return your own translations to the MT provider, and you use a paid service (or Lilt), and you anonymize any sensitive data, you should be safe. Of course, your client may forbid you to use such services anyway. If so, you can still use MT but offline; see below.

What about the European Union?

Then there is the particular case of translating for the European Union, and furthermore, the provisions in the General Data Protection Regulation (GDPR), which becomes applicable on 25 May 2018. As for EU translations, the European Commission uses the following clause in their Tender specifications: “Contractors intending to use web-based tools or any other web-based service (e.g. cloud computing) to execute the /framework contract/ must ensure full compliance with the terms of this call for tenders when using such services. In particular, the provisions on confidentiality must be respected throughout any web-based process and the Union's intellectual and industrial property rights must be safeguarded at all times.” The Commission considers the scope of this clause to be very broad, covering also the use of web-based translation tools.

A consequence of this is that translators are instructed not to use “open translation services” (beggars definition, does it not?) because of the risk of losing control over the contents. Instead, the Commission has its own MT system, e-Translation. On the other hand, it seems possible that the DG Translation is not quite up to date as concerns the current terms of service – quoted above – of Google Cloud Translate API and Microsoft Translator Text API, and if so, there may be a slight possibility that they might change their policy with regard to those services. But for now, the rule is that before a contractor uses web-based tools for an EU translation assignment, an authorisation to do so must be obtained (and so far, no such requests have been made).

As for the GDPR, it concerns mainly the protection of personal data, which may be a lesser problem generally for translators. In the words of Kamocki & Stauch on p. 72 of Machine Translation, “The user should generally avoid online MT services where he wishes to have information translated that concerns a third party (or is not sure whether it does or not)”.

Offline services and beyond

There are a number of MT programs intended for use offline (as plugins in CAT tools), which of course provides the best possible security (apart from the fact that transfer back and forth via email always constitutes a theoretical risk, which some clients try to eliminate by using specialized transfer sites). The drawback – apart from being limited to your own TMs – is that they tend to be pretty expensive to purchase.

The ones that I have found (based on investigations of plugins for SDL Trados Studio) are, primarily, Slate Desktop translation provider, Transistent API Connector, and Tayou Machine Translation Plugin. I should add that so far in this article I have only looked at MT providers which are based on providers of statistical machine translation or its further development, neural machine translation. But it seems that one offline contender which for some language combinations (involving English) also offers pretty good “services” is the rule-based PROMT Master 18.

However, in conclusion I would say that if we take the privacy statements from the MT providers at face value – and I do believe we can, even when we cannot verify them – then for most purposes the paid translation services mentioned above should be safe to use, particularly if you take care not to pass back your own translations. But still, I think both translators and their clients would do well to study the risks described and advice given by Don DePalma in this article. Its topic is free MT, but any translation service provider who wants to be honest in the relationship with the clients, while taking advantage of even paid MT, would do well to study it.

Mats Dannewitz Linder has been a freelance translator, writer and editor for the last 40 years alongside other occupations, IT standardization among others. He has degrees in computer science and languages and is currently studying national economics and political science. He is the author of the acclaimed Trados Studio Manual and for the last few years has been studying machine translation from the translator’s point of view, an endeavour which has resulted in several articles for the Swedish Association of Translators as well as an overview of Trados Studio apps/plugins for machine translation. He is self-employed at Nattskift Konsult.